In a first, Google is trusting a self-taught algorithm to manage part of its infrastructure.

Google revealed that it has given control of cooling at several of its leviathan data centers to an AI algorithm. Until now, the algorithm only made recommendations, and data center managers decided whether to implement them. Google says it has now effectively handed over control, and the algorithm manages cooling at those facilities all by itself.

“It’s the first time that an autonomous industrial control system will be deployed at this scale, to the best of our knowledge,” says Mustafa Suleyman, head of applied AI at DeepMind, the London-based artificial-intelligence company Google acquired in 2014.

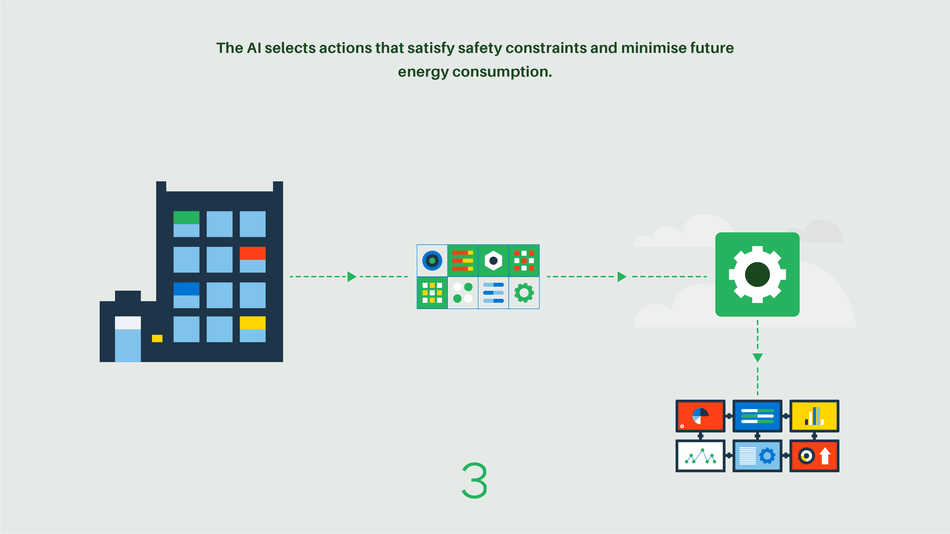

The project demonstrates the potential for artificial intelligence to manage infrastructure—and shows how advanced AI systems can work in collaboration with humans. Although the algorithm runs independently, a person manages it and can intervene if it seems to be doing something too risky.

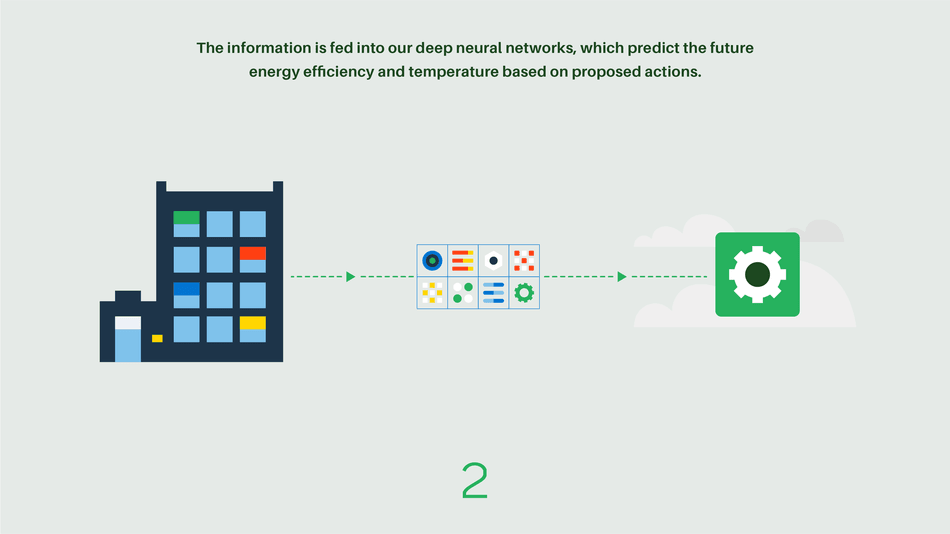

The algorithm uses a technique known as reinforcement learning, in which a system learns through trial and error. The same approach led to AlphaGo, the DeepMind program that vanquished human players of the board game Go.

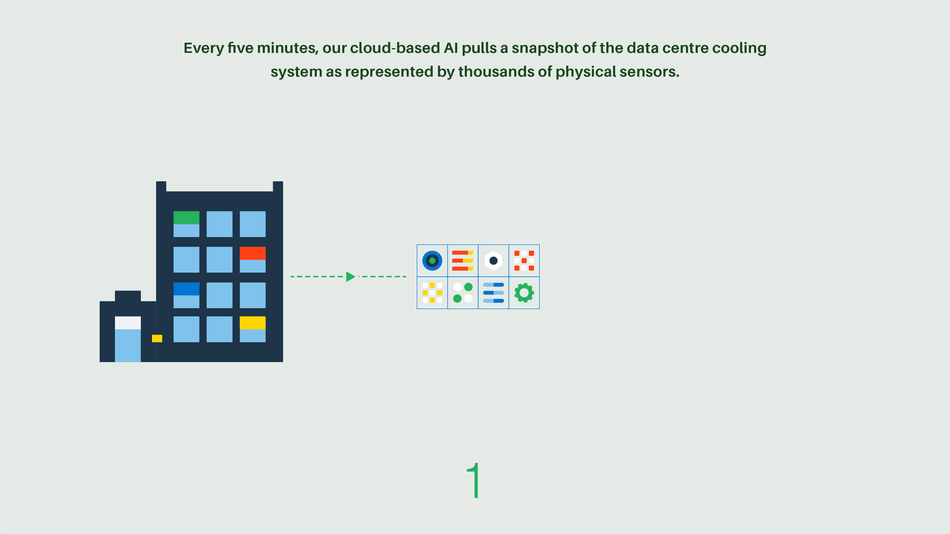

DeepMind fed its new algorithm information gathered from Google data centers and let it determine what cooling configurations would reduce energy consumption. The project could generate millions of dollars in energy savings and may help the company lower its carbon emissions, says Joe Kava, vice president of data centers for Google.
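Reinforcement learning in its simplest form can be sketched as a multi-armed bandit: the agent repeatedly tries a setting, observes the result, and shifts toward whatever has worked best. The Python below is a minimal illustration under stated assumptions, not DeepMind's system, whose internals the article does not describe. The setpoints, the toy `simulated_energy_kwh` model, and the `learn_setpoint` helper are all hypothetical, and a real controller would act on many sensors and interacting pieces of equipment rather than a single dial.

```python
import random

# Hypothetical cooling setpoints (degrees C) the agent can choose between.
SETPOINTS = [18.0, 20.0, 22.0, 24.0, 26.0]


def simulated_energy_kwh(setpoint: float, rng: random.Random) -> float:
    """Toy stand-in for a data center: energy use is lowest near 22 C, plus noise.

    Invented for illustration only; it does not model any real facility.
    """
    return 100.0 + 2.0 * (setpoint - 22.0) ** 2 + rng.gauss(0.0, 1.0)


def learn_setpoint(trials: int = 2000, epsilon: float = 0.1, seed: int = 0) -> float:
    """Epsilon-greedy bandit: learn by trial and error which setpoint uses least energy."""
    rng = random.Random(seed)
    counts = [0] * len(SETPOINTS)        # how often each setpoint has been tried
    avg_energy = [0.0] * len(SETPOINTS)  # running mean of observed energy per setpoint

    for _ in range(trials):
        untried = [i for i, c in enumerate(counts) if c == 0]
        if untried:
            # Try every setpoint at least once before comparing them.
            arm = untried[0]
        elif rng.random() < epsilon:
            # Explore: occasionally try a random setpoint.
            arm = rng.randrange(len(SETPOINTS))
        else:
            # Exploit: pick the setpoint with the lowest average energy so far.
            arm = min(range(len(SETPOINTS)), key=lambda i: avg_energy[i])

        energy = simulated_energy_kwh(SETPOINTS[arm], rng)
        counts[arm] += 1
        # Incremental update of the running mean for the chosen setpoint.
        avg_energy[arm] += (energy - avg_energy[arm]) / counts[arm]

    best = min(range(len(SETPOINTS)), key=lambda i: avg_energy[i])
    return SETPOINTS[best]


if __name__ == "__main__":
    print(learn_setpoint())  # prints 22.0
```

The `epsilon` parameter controls the trade-off at the heart of trial-and-error learning: higher values spend more trials exploring alternatives, while lower values settle on the current best guess sooner.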

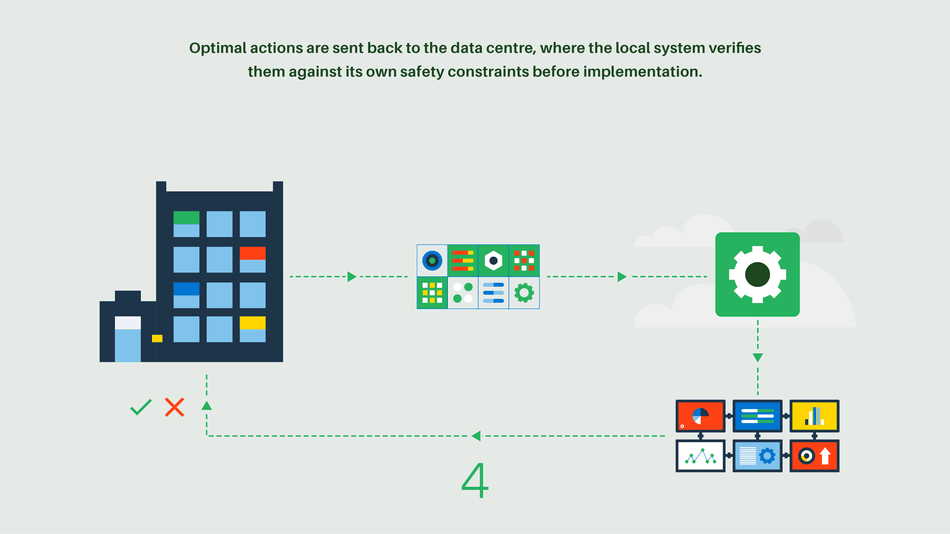

Kava says managers trusted the earlier system and had few concerns about delegating greater control to an AI. Still, the new system has safety controls to prevent it from doing anything that has an adverse effect on cooling. A data center manager can watch the system in action, see what the algorithm’s confidence level is about the changes it wants to make, and intervene if it seems to be doing something untoward.
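The kind of safeguard Kava describes can be sketched as a gate that sits between the algorithm and the equipment. In this minimal Python sketch, every name and threshold (`Proposal`, `gate`, `MIN_CONFIDENCE`, the 18 to 27 °C bounds) is a hypothetical assumption chosen for illustration; the article does not say how Google's controls are actually implemented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Proposal:
    """A change the (hypothetical) controller wants to make."""
    setpoint_c: float  # proposed cooling setpoint in degrees C
    confidence: float  # controller's confidence in the change, 0.0 to 1.0


# Hypothetical limits, chosen for illustration only.
SAFE_MIN_C, SAFE_MAX_C = 18.0, 27.0
MIN_CONFIDENCE = 0.8


def gate(proposal: Proposal, current_c: float,
         operator_override: Optional[float] = None) -> float:
    """Return the setpoint that will actually be applied."""
    # A human operator's explicit choice always wins.
    if operator_override is not None:
        return operator_override
    # Low-confidence proposal: keep the current setpoint.
    if proposal.confidence < MIN_CONFIDENCE:
        return current_c
    # Proposal outside hard safety bounds: keep the current setpoint.
    if not (SAFE_MIN_C <= proposal.setpoint_c <= SAFE_MAX_C):
        return current_c
    return proposal.setpoint_c


if __name__ == "__main__":
    print(gate(Proposal(setpoint_c=22.0, confidence=0.9), current_c=24.0))  # prints 22.0
    print(gate(Proposal(setpoint_c=22.0, confidence=0.5), current_c=24.0))  # prints 24.0
```

In this sketch an operator override bypasses the automated checks, reflecting the idea that the person supervising the system has the final say; a stricter design might bounds-check human input as well.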

Energy consumption by data centers has become a pressing issue for the tech industry. A 2016 report from researchers at the US Department of Energy’s Lawrence Berkeley National Laboratory found that US data centers consumed about 70 billion kilowatt-hours in 2014—about 1.8 percent of total national electricity use.

But efforts to improve energy efficiency have been significant. The same report found that efficiency gains have nearly offset the growth in energy use from new data centers, with total consumption projected to rise only slightly, to around 73 billion kilowatt-hours by 2020.
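As a rough check on these figures (simple arithmetic derived here, not numbers stated in the report), the 1.8 percent share implies total US electricity use of roughly 3.9 trillion kilowatt-hours in 2014, and the projected rise from 70 to 73 billion kilowatt-hours over six years works out to well under 1 percent growth per year:

$$
\frac{70 \times 10^{9}\ \text{kWh}}{0.018} \approx 3.9 \times 10^{12}\ \text{kWh},
\qquad
\left(\frac{73}{70}\right)^{1/6} - 1 \approx 0.007 \quad (\approx 0.7\%\ \text{per year, 2014 to 2020}).
$$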

“Use of machine learning is an important development,” says Jonathan Koomey, one of the world’s leading experts on data center energy usage. But he adds that cooling accounts for a relatively small share of a data center’s energy use, around 10 percent.

Koomey thinks using machine learning to optimize the behavior of the power-hungry computer chips inside data centers could prove even more significant. “I’m eager to see Google and other big players apply such tools to optimizing their computing loads,” he says. “The possibilities on the compute side are tenfold bigger than for cooling.”

By Will Knight